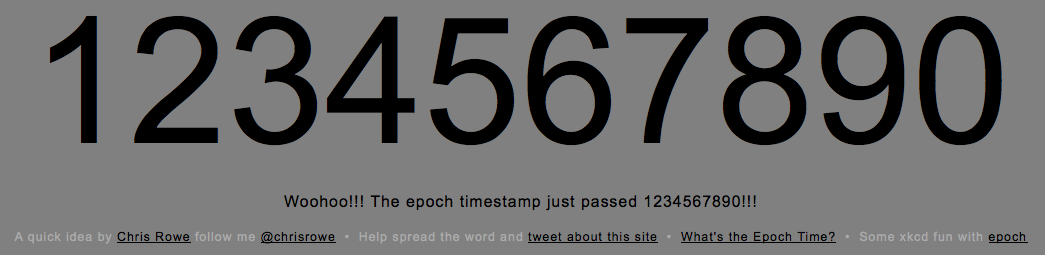

Today I witnessed history. Unix history, that is. At 3:31:30 PM Pacific time today (23:31:30 UTC), the Unix timestamp passed the 1234567890 mark.

So what?

Essentially, computer time starts on January 1st, 1970 at midnight UTC. We call this time zero (represented by 32 zeroes in binary) and count up from there. Ever since the beginning of 1970, this little 32-bit integer has been counting upwards (in seconds) to keep track of time in computer systems everywhere. Servers, phones, traffic systems, planes, and tons of other electronic devices all use Unix time. Unix time isn’t just for the Unix operating system.
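To make the counting concrete, here is a minimal Python sketch that turns a timestamp back into a calendar date. The variable names are mine, and the fixed UTC-8 offset is an assumption standing in for Pacific Standard Time:

```python
from datetime import datetime, timedelta, timezone

# The timestamp celebrated in this post.
stamp = 1234567890

# Unix time counts seconds since 1970-01-01 00:00:00 UTC.
epoch = datetime.fromtimestamp(0, tz=timezone.utc)
moment = datetime.fromtimestamp(stamp, tz=timezone.utc)

# Assumption: a fixed UTC-8 offset stands in for Pacific Standard Time.
pacific = moment.astimezone(timezone(timedelta(hours=-8)))

print(epoch.isoformat())    # 1970-01-01T00:00:00+00:00
print(moment.isoformat())   # 2009-02-13T23:31:30+00:00
print(pacific.isoformat())  # 2009-02-13T15:31:30-08:00
```

The timestamp itself is the same everywhere. Only the wall-clock time you see depends on your time zone.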

That’s why it’s so awesome that all these electronic devices shared the same numerically beautiful timestamp, 1234567890, for exactly one second today. Computer science enthusiasts (also known as geeks) held parties all around the world to celebrate.

Lots of Stanford CS students celebrated too. Eric Roberts, the professor for CS106A (the class that I section lead), made his entire intro CS class watch the final minutes of the countdown. He made sure to explain Unix time for students who had never heard of it before.

I followed the time from http://coolepochcountdown.com/ which provided a nice countdown timer and firework effects once the time reached 1234567890 – something I wasn’t really expecting.

The next Y2K scare?

Although today’s event was fun, it raises the question of what will happen when the Unix clock runs out of seconds to count. What happens when the time reaches the signed 32-bit limit, 2147483647 (a 0 followed by 31 ones in binary), and rolls over to -2147483648, integer overflow style? Computers will suddenly think the date is December 13, 1901 at 8:45:52 PM UTC! This event is scheduled to happen at 3:14:07 AM UTC on Tuesday, 19 January 2038, so this is a real concern.
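The rollover can be simulated in a few lines. This is only a sketch: Python integers never overflow on their own, so I am assuming `ctypes.c_int32` is a fair stand-in for a signed 32-bit time counter, and `as_utc` is a helper I made up for the illustration:

```python
import ctypes
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def as_utc(seconds):
    # Hypothetical helper: adding a timedelta to the epoch also works
    # for negative values, which some platforms reject as timestamps.
    return EPOCH + timedelta(seconds=seconds)

# Largest value a signed 32-bit integer can hold.
max_32 = 2**31 - 1
bits = f"{max_32:032b}"  # a 0 followed by 31 ones

# One more second: c_int32 keeps only the low 32 bits, so the value wraps.
wrapped = ctypes.c_int32(max_32 + 1).value

print(bits)
print(max_32, as_utc(max_32).isoformat())    # 2147483647 2038-01-19T03:14:07+00:00
print(wrapped, as_utc(wrapped).isoformat())  # -2147483648 1901-12-13T20:45:52+00:00
```

What a real system does at that moment depends on how its software stores and uses the time value. The sketch only shows the arithmetic.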

Will society fall into chaos and computer systems cease to function (as the Y2K bug was predicted to do)? Or will the effects be much less profound, just causing annoyance (incorrect date on your desktop) but no devastation?

The more important question to be asking is: when will we all be using 64-bit processors? After all, 64-bit systems typically track time with a 64-bit integer, allowing us to count back more than 20 times the age of the universe, and around 292 billion years into the future.

64-bit would solve this problem once and for all. At least until we run out of numbers in 64-bit, in 292 billion years.
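For the curious, the 64-bit figure is easy to check with some back-of-the-envelope arithmetic. This sketch assumes an average Gregorian year of 365.2425 days and an age of the universe of about 13.8 billion years. Both numbers are my assumptions, not something the timestamp format defines:

```python
# Assumption: average Gregorian year length, in seconds.
SECONDS_PER_YEAR = 365.2425 * 24 * 60 * 60

# Assumption: approximate age of the universe, in billions of years.
UNIVERSE_AGE_BILLION_YEARS = 13.8

# Largest value a signed 64-bit integer can hold.
max_64 = 2**63 - 1

years_billion = max_64 / SECONDS_PER_YEAR / 1e9
multiples = years_billion / UNIVERSE_AGE_BILLION_YEARS

print(f"{years_billion:.1f} billion years")          # 292.3 billion years
print(f"{multiples:.1f} times the age of the universe")  # 21.2 times the age of the universe
```

So a signed 64-bit counter of seconds lasts a little over 292 billion years in each direction from 1970.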
